I’m CK (Kevin) Zhang, a designer-engineer focused on cognition tech that has to work in crowded clinics and shared classrooms. I take scrappy prototypes, steady them through fieldwork, and hand them back as repeatable workflows.

EyeCI started because a volunteer trip in Wencheng County showed me how quickly care teams lose trust when screening tools expect the wrong behaviour from the people being screened.

Where “just look” came from

I joined a community health team running MMSE screenings and watched seemingly healthy neighbours screen positive for cognitive decline. It hit me how many cases clinics miss simply because screening is expensive and infrequent.

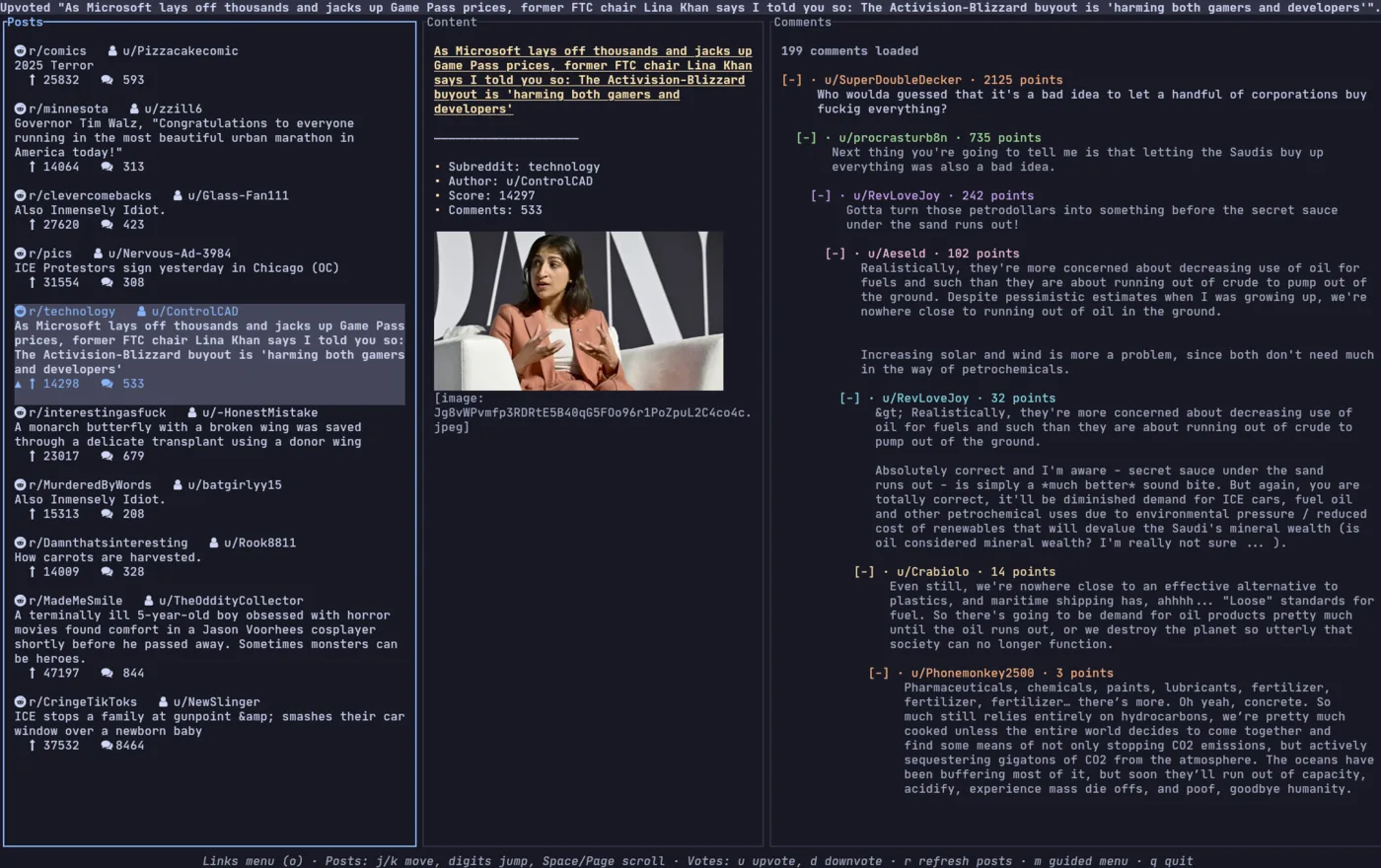

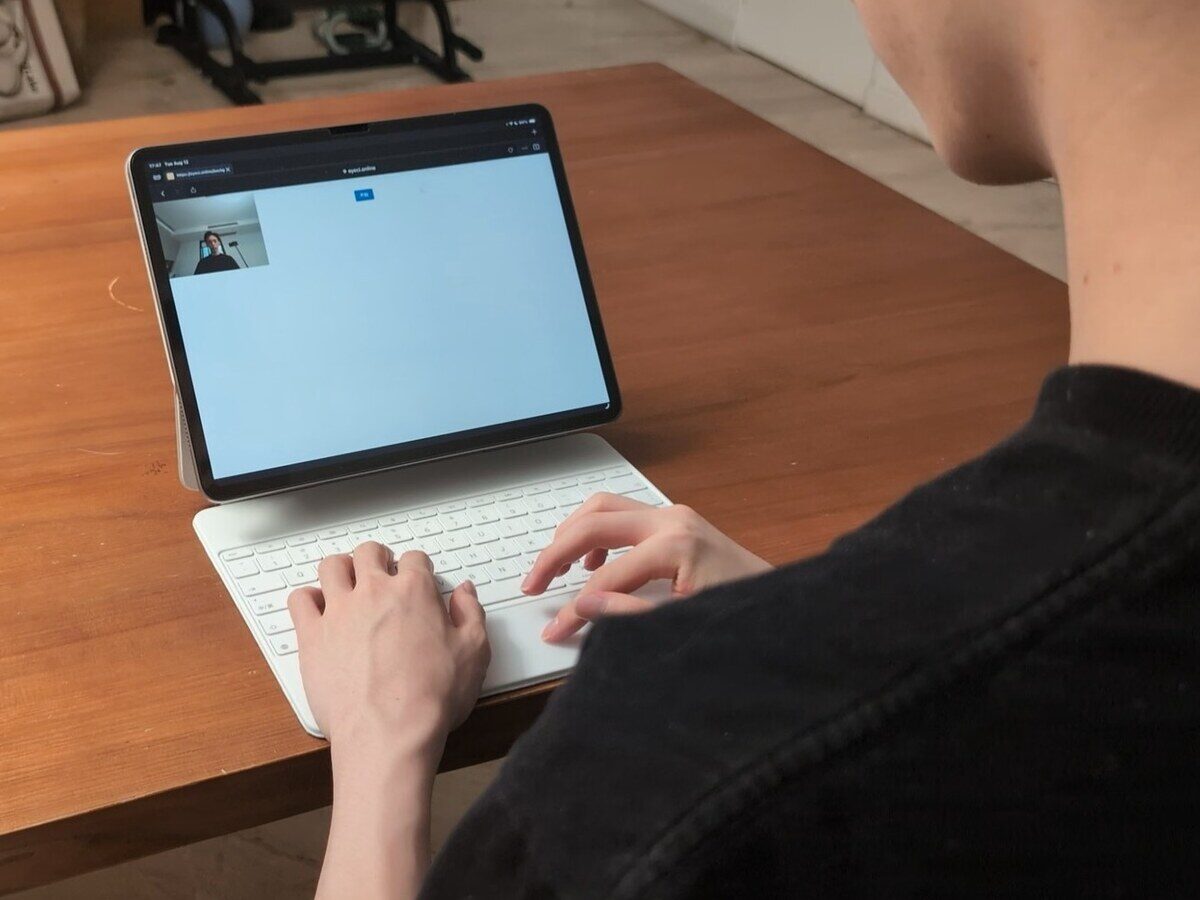

Over a weekend I hacked together a webcam-based eye tracker on a tablet, assuming touch would feel natural. The next day the line stalled: participants hovered, pressed too hard, and calibrations fell apart. I returned with a single instruction, “just look.” Switching to gaze-only calibration relaxed the room and produced clean signals. By summer’s end we had recorded about 60 sessions, early evidence that low-cost screening could work.

That moment now echoes through everything else: I open-sourced the EyeTrax toolkit, grew EyeCI to 2,095 webcam sessions, and partnered with clinics under Project Argus to keep that gaze-only flow alive.

The throughline: tools earn trust when they honour the people using them. Scroll on for the proof.