Hi, I’m CK Zhang

I build gaze and cognition tools that run on webcams and everyday devices.

I’m a student working at the edge of biomedical engineering, cognition, and HCI. Most of what I build starts as a small experiment and sticks around if other people find it useful.

Came from EyeTrax or Reddix? Jump to Tools I use ↓

Work — Gaze & cognition

Gaze & cognition projects.

Webcam-based workflows for screening, experiments, and clinics.

Journey — Gaze pipeline

How the eye-tracking stack evolved.

1. EyePy — First research sprint on affordable gaze tracking

Built EyePy during a research program: a webcam-based eye-tracking prototype using dlib and low-cost webcams.

2. EyeTrax — Open-source webcam gaze toolkit

Turned the EyePy ideas into EyeTrax, a reusable library with calibration flows, smoothing filters, and an overlay tool built on MediaPipe landmarks so others could use the gaze pipeline without rewriting it.

Sample gaze trace from the EyeTrax overlay tool.

-

3

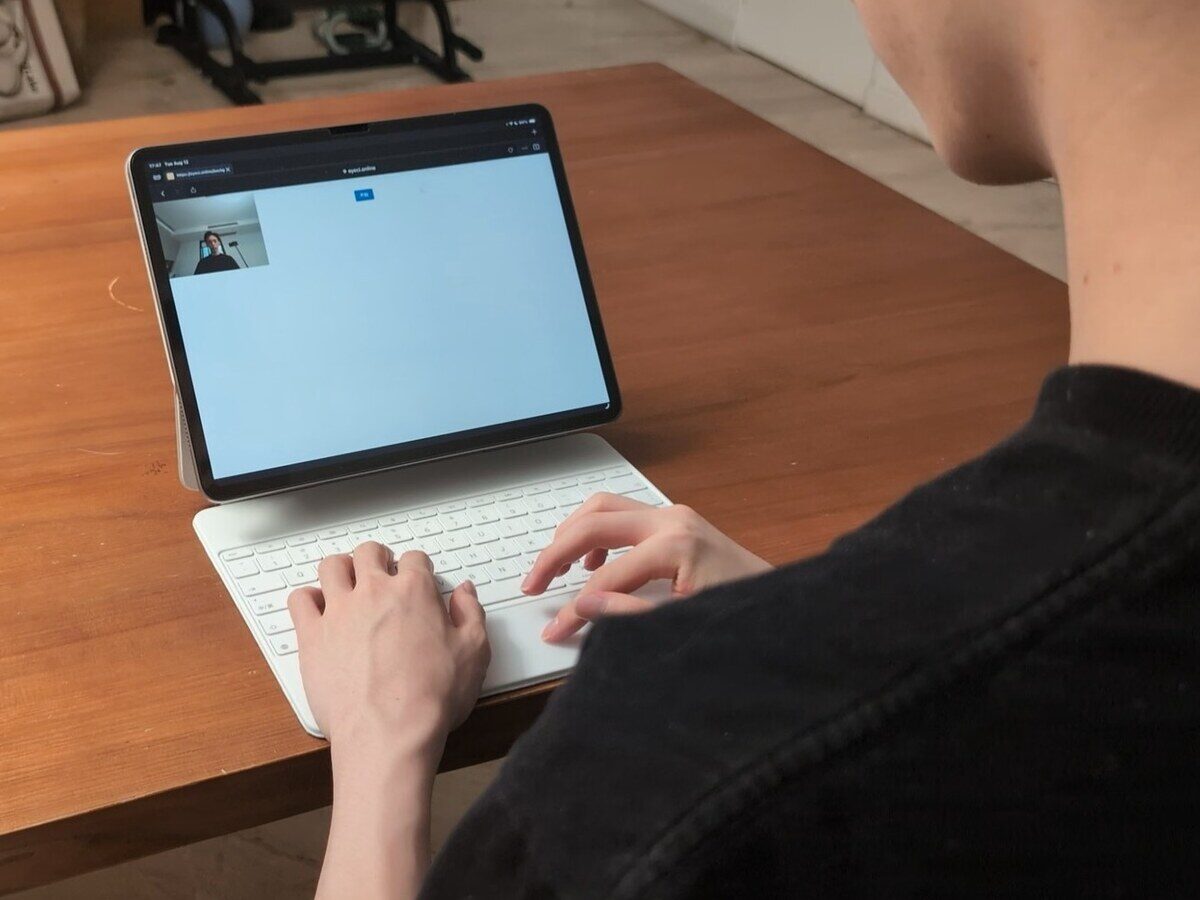

EyeCI — Webcam cognitive screening research

Built EyeCI on top of EyeTrax: designed tasks, ran early webcam sessions, cleaned the data, and trained a model that turns gaze traces into screening probabilities with reliability checks.

4. Project Argus — Dual-track platform

Argus now runs as two equally important branches: the original clinic workflow (validated on 2,095 webcam sessions) and an ASD research track with Xunfen Biotech.

Project Argus

Two equal tracks built on the same low-friction gaze pipeline: clinic deployment and ASD-focused research.

Clinic workflow

Five-minute browser-based screening support for outpatient clinics and community screenings.

Clinic branch: bilingual onboarding, quality gating, and clinic-friendly reporting.

- Focus: reliable deployment under real-world lighting and device variability.

- Validation: tracked session-level failure modes (occlusion, drift, head pose).

ASD research with Xunfen Biotech

Projectris flow pairing a parent questionnaire with eye-movement collection, designed for structured ASD-oriented research capture.

ASD branch app preview: parent questionnaire → eye-movement collection → completion.

- Focus: capture stable signals while keeping setup burden low for families and staff.

- Data stream: questionnaire metadata paired with guided webcam gaze collection.

Other things I’ve built.

Side projects and experiments in chronological order.

Stayed late after school for our LEGO engineering club

Wiring up sensors, tweaking small robots, and showing quick demos to classmates. First time I saw how simple prototypes can get other people to try things.

Role: Student

Mistake.nvim — Autocorrect for my Neovim notes

Moved my diaries into Neovim and wanted autocorrect that didn’t get in the way. Mistake.nvim started as a personal plugin and slowly turned into something other people also installed.

Role: Builder

Reddix — A lightweight Reddit client that lives in the terminal

Built Reddix so Reddit could live in the same terminal pane as my tools. It picked up users quickly and became my main playground for Rust async pipelines, caching, and release discipline.

Role: Solo builder

Work — Tools I use myself

Tools I use every day.

Small open-source projects that started as workflow hacks.

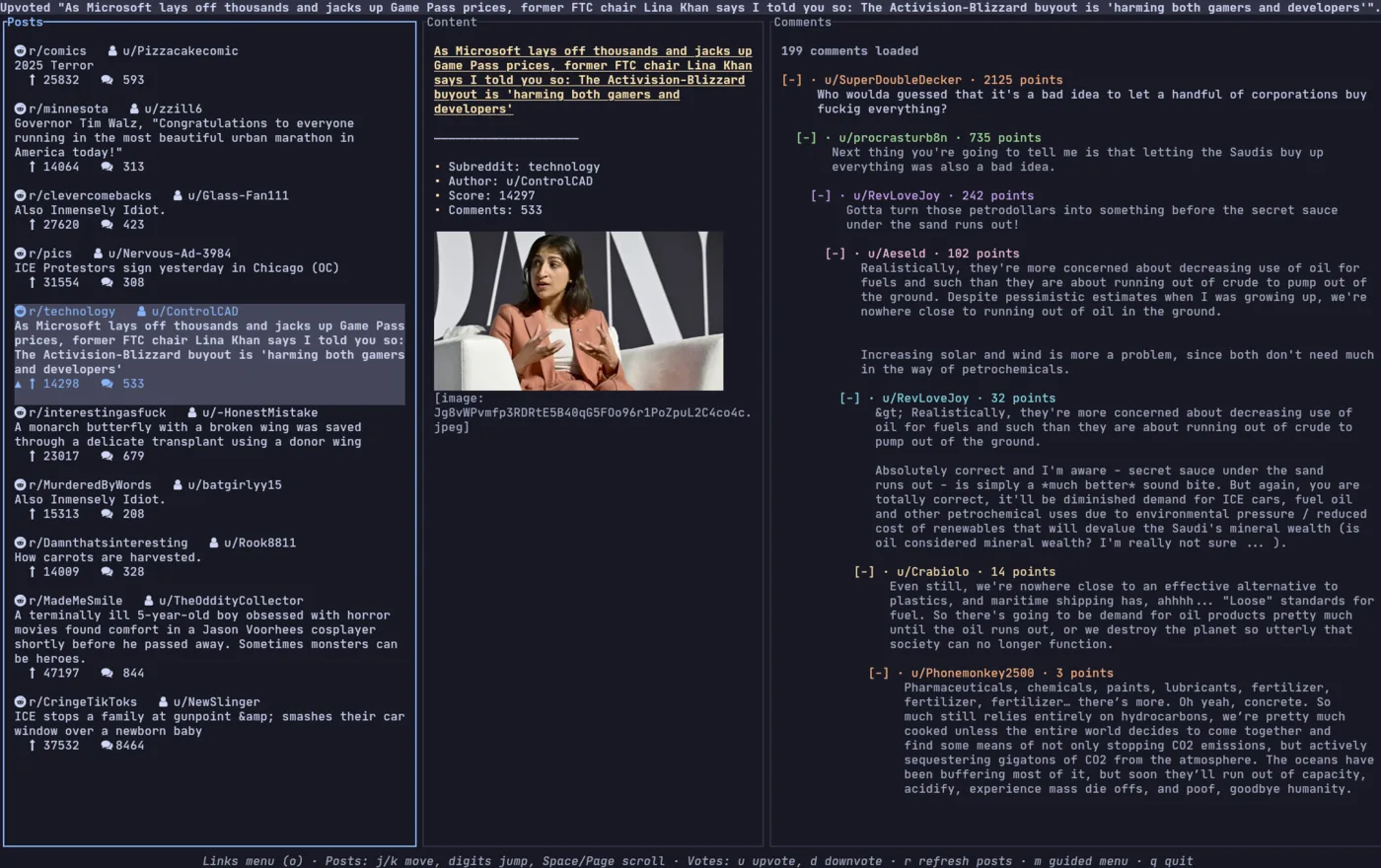

Reddix · Reddit, tuned for the terminal

Reddix is a keyboard-first Reddit client that lives in the terminal. It supports inline image previews via the Kitty graphics protocol, video playback through mpv, multi-account login, smart caching, and Vim-style navigation.

I originally built it so I could keep Reddit threads beside my tools without opening a browser.

Mistake.nvim · Autocorrect for my Neovim notes

Mistake.nvim is a spelling autocorrect plugin for Neovim whose correction dictionary is built from GitHub “fixed typo” commits and common misspelling datasets. It lazy-loads the 20k+ entry dictionary in chunks, lets me add my own corrections, and stays fast enough to use while journaling.

Other small tools

- EyePy — first webcam gaze prototype from my Pioneer Academics project; leaned on dlib and cheap webcams, and set up the questions that led to EyeTrax and EyeCI.

- Gnav — GNOME workspace navigator with a fuzzy-search launcher using Wofi. (Go Packages)

- thumbgrid — Go CLI for generating thumbnail grids from videos and images for quick visual scans. (GitHub)

- Obfuscate.nvim — dims and obfuscates code when I’m coding in public so onlookers pick up less detail at a glance. (GitHub)

About

I’m CK (Kevin) Zhang, a student who likes building tools around gaze, cognition, and interfaces. Most of my work sits where biomedical engineering, HCI, and everyday devices overlap.

I care about tools that can survive crowded clinics and shared classrooms without asking people to change how they behave. That’s why I’ve followed the same thread from EyePy to EyeTrax, EyeCI, and now Argus, with its clinic branch and its ASD research branch with Xunfen Biotech: keep the hardware cheap, use the signals well, and make the interface feel as close to “just look” as possible.

Long-term, I want to keep working on gaze and cognition tools within biomedical engineering and HCI.

Contact.

If you’re working on gaze, cognition tools, terminal UIs, or related projects, I’m happy to chat or share what I’ve tried so far.

Email: ck.zhang26@gmail.com

GitHub: github.com/ck-zhang